Project A02: Monitoring the understanding of explanations

When something is being explained to someone, the explainee signals their understanding, or lack thereof, to the explainer through verbal expressions and non-verbal means of communication such as gestures and facial expressions. By nodding, for example, the explainee can signal that they have understood. A nod, however, can also be meant as a request to continue with the explanation; which meaning is intended has to be inferred from the context of the conversation. In Project A02, linguists and computational linguists are investigating how people (and, later, artificial agents) recognize whether the person they are explaining something to is understanding. To this end, the research team will analyze 80 dialogues in which one person explains a social game to another, examining them for communicative feedback signals that indicate varying degrees of comprehension as understanding develops. The findings from these analyses will be incorporated into an intelligent system that will be able to detect feedback signals such as head nods and interpret them in terms of the level of understanding they signal.

Research areas: Computer science, Linguistics

Support Staff

Nico Dallmann - Bielefeld University

Sonja Friedla - Paderborn University

Jule Kötterheinrich - Paderborn University

Daniel Mohr - Paderborn University

Celina Nitschke - Paderborn University

Göksun Beeren Usta - Bielefeld University

Posters

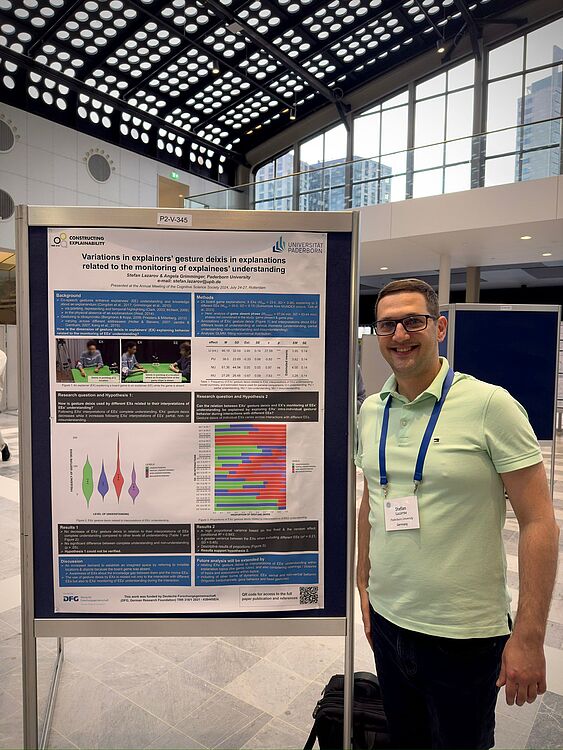

Conference poster presented at CogSci 2024 for the publication "Variations in explainers’ gesture deixis in explanations related to the monitoring of explainees’ understanding" by Stefan Lazarov and Angela Grimminger.

Conference poster presented at the Symposium Series on Multimodal Communication 2023 with the title "An Unsupervised Method for Head Movement Detection" by Yu Wang and Hendrik Buschmeier.

Conference poster presented at the Symposium Series on Multimodal Communication 2023 with the title "The relation between multimodal behaviour and elaborations in explanations" by Stefan Lazarov and Angela Grimminger.

Publications

How much does nonverbal communication conform to entropy rate constancy?: A case study on listener gaze in interaction

Y. Wang, Y. Xu, G. Skantze, H. Buschmeier, in: Findings of the Association for Computational Linguistics: ACL 2024, Bangkok, Thailand, 2024, pp. 3533–3545.

Turn-taking dynamics across different phases of explanatory dialogues

P. Wagner, M. Włodarczak, H. Buschmeier, O. Türk, E. Gilmartin, in: Proceedings of the 28th Workshop on the Semantics and Pragmatics of Dialogue, Trento, Italy, 2024, pp. 6–14.

Conversational feedback in scripted versus spontaneous dialogues: A comparative analysis

I. Pilán, L. Prévot, H. Buschmeier, P. Lison, in: Proceedings of the 25th Meeting of the Special Interest Group on Discourse and Dialogue, Kyoto, Japan, 2024, pp. 440–457.

Automatic reconstruction of dialogue participants’ coordinating gaze behavior from multiple camera perspectives

A.N. Riechmann, H. Buschmeier, in: Book of Abstracts of the 2nd International Multimodal Communication Symposium, Frankfurt am Main, Germany, 2024, pp. 38–39.

A model of factors contributing to the success of dialogical explanations

M. Booshehri, H. Buschmeier, P. Cimiano, in: Proceedings of the 26th ACM International Conference on Multimodal Interaction, ACM, San José, Costa Rica, 2024, pp. 373–381.

Predictability of understanding in explanatory interactions based on multimodal cues

O. Türk, S. Lazarov, Y. Wang, H. Buschmeier, A. Grimminger, P. Wagner, in: Proceedings of the 26th ACM International Conference on Multimodal Interaction, San José, Costa Rica, 2024, pp. 449–458.

Towards a BFO-based ontology of understanding in explanatory interactions

M. Booshehri, H. Buschmeier, P. Cimiano, in: Proceedings of the 4th International Workshop on Data Meets Applied Ontologies in Explainable AI (DAO-XAI), International Association for Ontology and its Applications, Santiago de Compostela, Spain, 2024.

Variations in explainers’ gesture deixis in explanations related to the monitoring of explainees’ understanding

S.T. Lazarov, A. Grimminger, in: Proceedings of the Annual Meeting of the Cognitive Science Society, 2024.

Explain with, rather than explain to: How explainees shape their own learning

J.B. Fisher, K.J. Rohlfing, E. Donnellan, A. Grimminger, Y. Gu, G. Vigliocco, Interaction Studies 25 (2024) 244–255.

Towards a Computational Architecture for Co-Constructive Explainable Systems

H. Buschmeier, P. Cimiano, S. Kopp, J. Kornowicz, O. Lammert, M. Matarese, D. Mindlin, A.S. Robrecht, A.-L. Vollmer, P. Wagner, B. Wrede, M. Booshehri, in: Proceedings of the 2024 Workshop on Explainability Engineering, ACM, 2024, pp. 20–25.

Does listener gaze in face-to-face interaction follow the Entropy Rate Constancy principle: An empirical study

Y. Wang, H. Buschmeier, in: Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 2023, pp. 15372–15379.

Modeling Feedback in Interaction With Conversational Agents—A Review

A. Axelsson, H. Buschmeier, G. Skantze, Frontiers in Computer Science 4 (2022).
