Project B03: Exploring users, roles, and explanations in real-world contexts

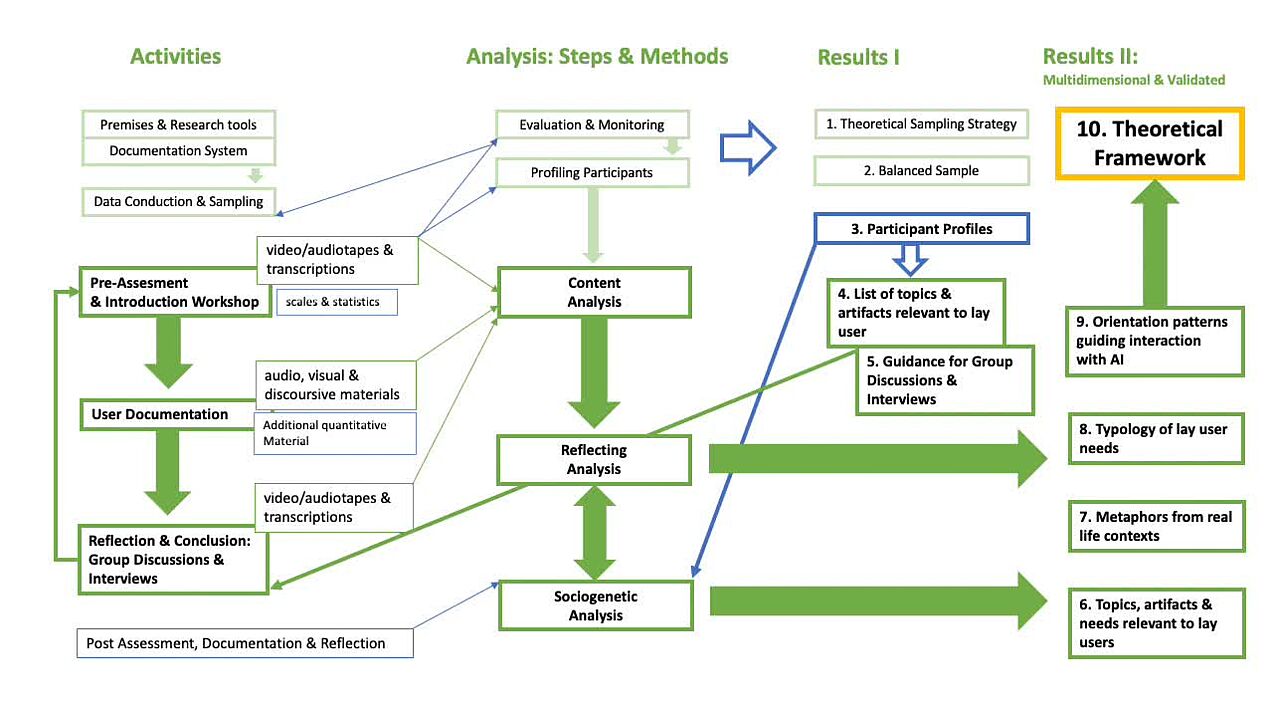

In the first funding phase, project B03 investigated how lay users make sense of Artificial Intelligence (AI) in their everyday lives. Drawing on qualitative data from 63 participants from Germany and the UK, the project analysed everyday experiences with AI technologies through surveys, digital diaries, interviews, and group discussions.

The research highlights how individual context and prior knowledge shape when and why people feel that explanations of AI are necessary. Users often draw on non-technical knowledge to interpret AI, and political and social considerations frequently matter more to them than technical aspects.

The project concludes that explainable AI (XAI) research must move beyond purely technical solutions to better understand the social processes through which people interpret and evaluate AI systems.

Research areas: Sociology, Media studies

Project duration: 07/2021-12/2025

Former Members

Prof. Dr. Ilona Horwath, Project leader

Dr. Christian Schulz, Research associate

Dr. Patricia Jimenez Lugo, Research associate

Lea Biere, Associate member

Josefine Finke, Research associate

Patrick Henschen, Research associate

Publications

Structures Underlying Explanations

P. Jimenez, A.-L. Vollmer, H. Wachsmuth, in: K. Rohlfing, K. Främling, B. Lim, S. Alpsancar, K. Thommes (Eds.), Social Explainable AI: Communications of NII Shonan Meetings, Springer Singapore, n.d.

Investigating Co-Constructive Behavior of Large Language Models in Explanation Dialogues

L. Fichtel, M. Spliethöver, E. Hüllermeier, P. Jimenez, N. Klowait, S. Kopp, A.-C. Ngonga Ngomo, A. Robrecht, I. Scharlau, L. Terfloth, A.-L. Vollmer, H. Wachsmuth, arXiv:2504.18483 (2025).

Investigating Co-Constructive Behavior of Large Language Models in Explanation Dialogues

L. Fichtel, M. Spliethöver, E. Hüllermeier, P. Jimenez, N. Klowait, S. Kopp, A.-C. Ngonga Ngomo, A. Robrecht, I. Scharlau, L. Terfloth, A.-L. Vollmer, H. Wachsmuth, in: Proceedings of the 26th Annual Meeting of the Special Interest Group on Discourse and Dialogue, Association for Computational Linguistics, Avignon, France, n.d.

Forms of Understanding for XAI-Explanations

H. Buschmeier, H.M. Buhl, F. Kern, A. Grimminger, H. Beierling, J.B. Fisher, A. Groß, I. Horwath, N. Klowait, S.T. Lazarov, M. Lenke, V. Lohmer, K. Rohlfing, I. Scharlau, A. Singh, L. Terfloth, A.-L. Vollmer, Y. Wang, A. Wilmes, B. Wrede, Cognitive Systems Research 94 (2025).

Vernacular Metaphors of AI

C. Schulz, A. Wilmes, in: ICA Preconference Workshop “History of Digital Metaphors”, University of Toronto, May 25, n.d.

(De)Coding Social Practice in the Field of XAI: Towards a Co-constructive Framework of Explanations and Understanding Between Lay Users and Algorithmic Systems

J. Finke, I. Horwath, T. Matzner, C. Schulz, in: Artificial Intelligence in HCI, Springer International Publishing, Cham, 2022, pp. 149–160.