Developing Explanations Together

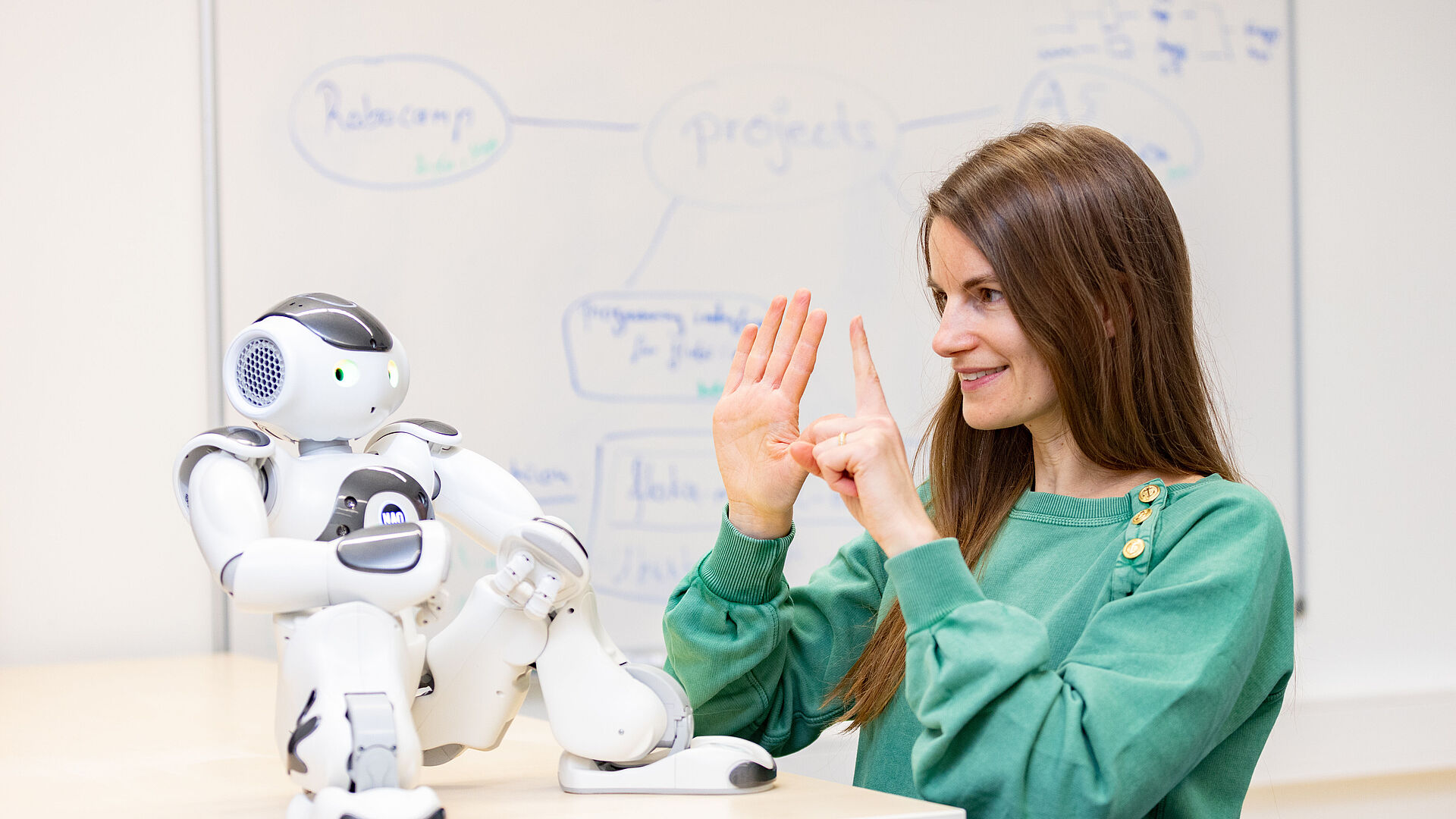

Algorithm-based approaches such as machine learning are becoming increasingly complex. The resulting lack of transparency makes it harder for human users to understand and accept the decisions proposed by artificial intelligence (AI). In the Transregional Collaborative Research Center 318 “Constructing Explainability”, researchers are developing ways to involve users in the explanation process and thus create co-constructive explanations. To this end, the interdisciplinary research team investigates the principles, mechanisms, and social practices of explaining and how these can be taken into account in the design of AI systems. The goal of the project is to make explanatory processes comprehensible and to create understandable assistance systems.

News

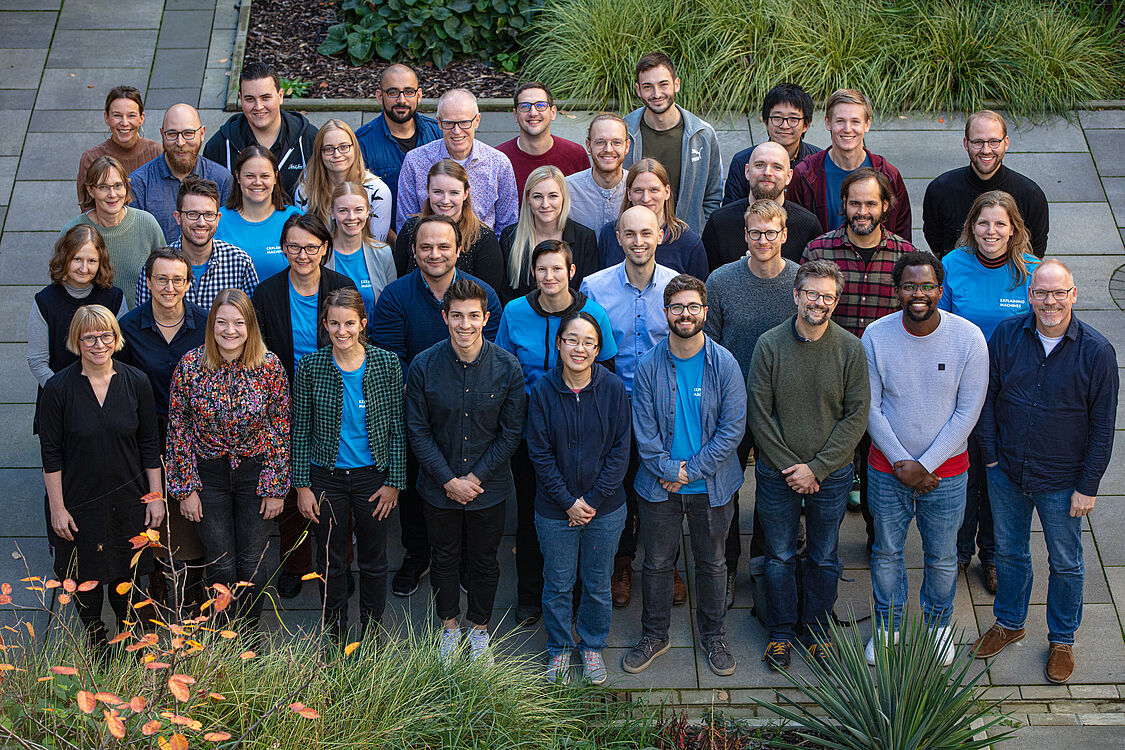

Members

A total of 22 project leaders and about 40 research assistants investigate the co-construction of explanations. They come from computer science, economics, linguistics, media science, philosophy, psychology, and sociology at Bielefeld University and Paderborn University.
