Project C01: Explanations for healthy distrust in large language models

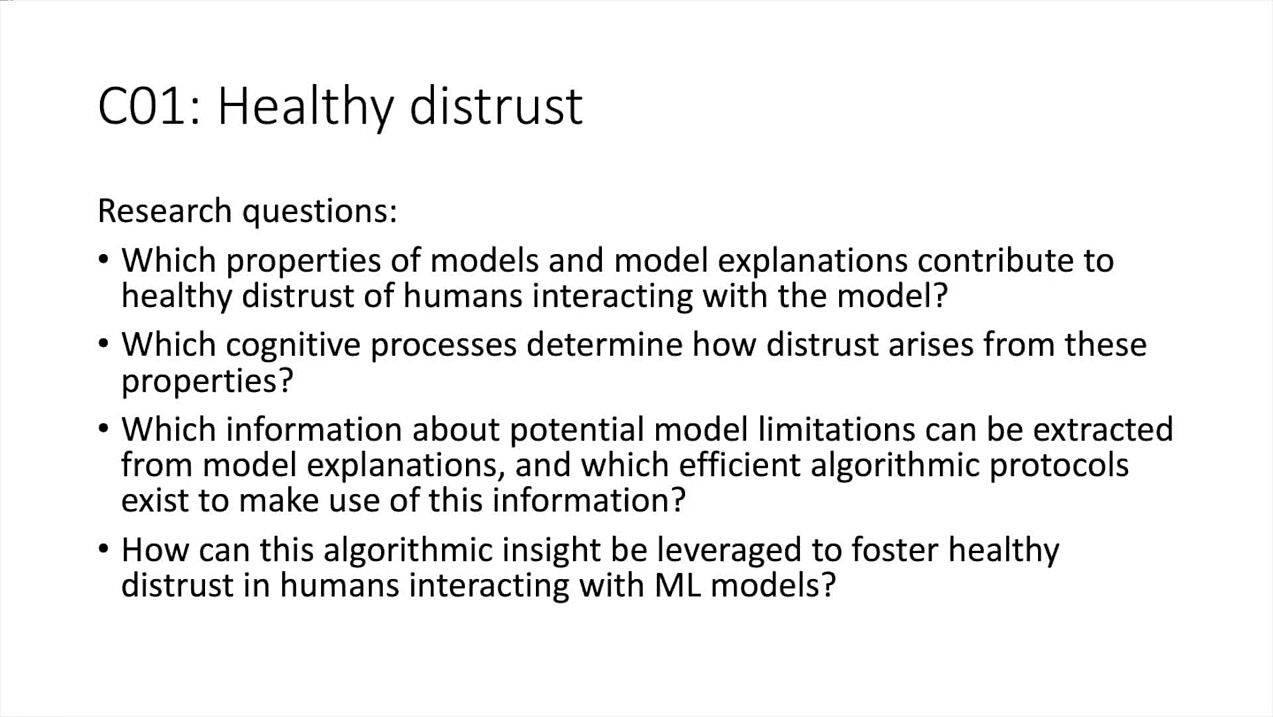

Machine learning models have limitations. That is why it is important to critically question the results of applications based on large language models (LLMs), such as ChatGPT, instead of trusting them blindly. In the first funding phase of the project, the team developed a common language for trust and distrust. A study also showed that simple warnings (disclaimers) are not sufficient to promote a healthy level of skepticism. Researchers from psychology and computer science therefore developed new approaches to explaining model uncertainty in an understandable way. Building on this work, the project team is now developing targeted measures to promote healthy distrust, especially when LLMs are used to support scientific writing. New forms of explanation, dubbed “perplexing explanations,” play a central role in this effort. They are intended to make students aware of the unreliability of LLMs and will be deployed as an automated method within TRR 318 to strengthen human autonomy when dealing with AI systems.

Research areas: Computer science, Psychology

Support Staff

Valeska Behr, Paderborn University

Oliver Debernitz, Paderborn University

Former Members

Roelof Visser, Research associate

Publications

Assessing healthy distrust in human-AI interaction: interpreting changes in visual attention

T.M. Peters, K. Biermeier, I. Scharlau, Frontiers in Psychology 16 (2026).

Trust, distrust, and appropriate reliance in (X)AI: A conceptual clarification of user trust and survey of its empirical evaluation

R. Visser, T.M. Peters, I. Scharlau, B. Hammer, Cognitive Systems Research (2025).

Interacting with fallible AI: Is distrust helpful when receiving AI misclassifications?

T.M. Peters, I. Scharlau, Frontiers in Psychology 16 (2025).

Explaining Outliers using Isolation Forest and Shapley Interactions

R. Visser, F. Fumagalli, E. Hüllermeier, B. Hammer, in: Proceedings of the European Symposium on Artificial Neural Networks (ESANN), 2025.

The Importance of Distrust in AI

T.M. Peters, R.W. Visser, in: Communications in Computer and Information Science, Springer Nature Switzerland, Cham, 2023.

“I do not know! but why?” — Local model-agnostic example-based explanations of reject

A. Artelt, R. Visser, B. Hammer, Neurocomputing 558 (2023).

Explaining Reject Options of Learning Vector Quantization Classifiers

A. Artelt, J. Brinkrolf, R. Visser, B. Hammer, in: Proceedings of the 14th International Joint Conference on Computational Intelligence, SCITEPRESS - Science and Technology Publications, 2022.

Model Agnostic Local Explanations of Reject

A. Artelt, R. Visser, B. Hammer, in: ESANN 2022 Proceedings, Ciaco - i6doc.com, 2022.
