Project B05: Co-constructing explainability with an interactively learning robot

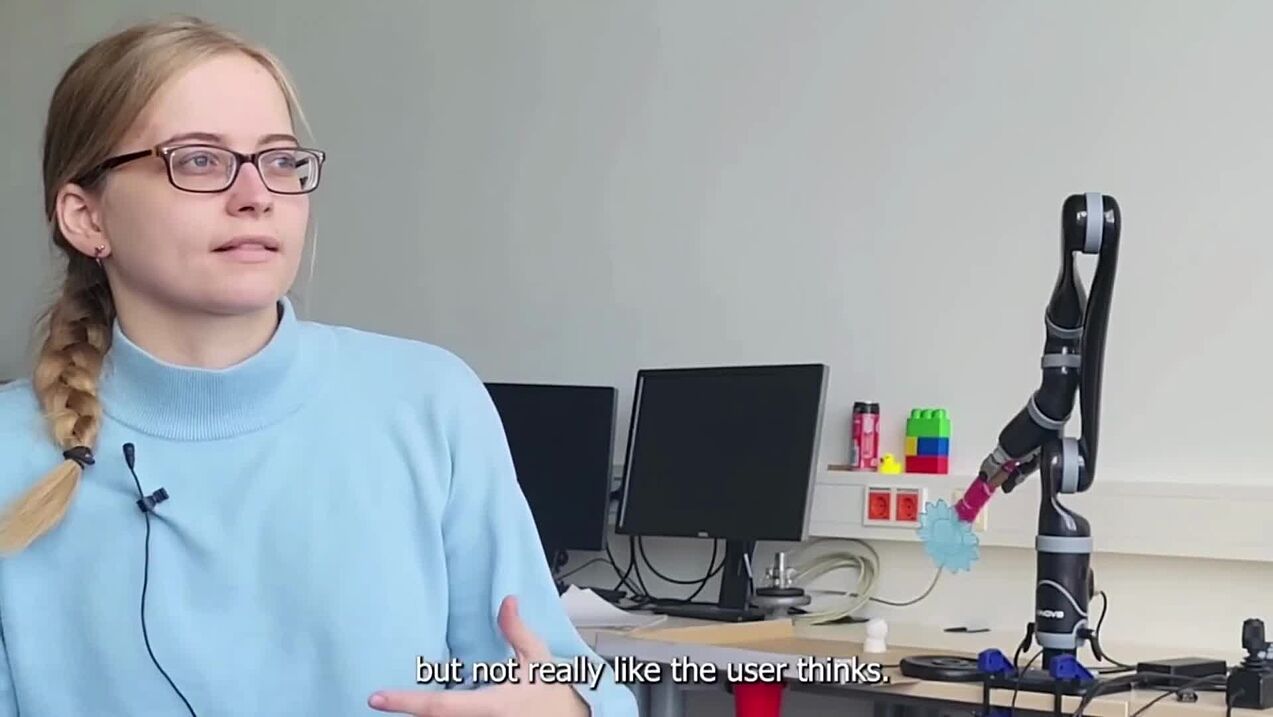

In Project B05, researchers from the field of computer science are exploring non-verbal explanations between humans and machines. A robot is tasked with learning an action, such as a specific movement, by interacting with a human. Misunderstandings can arise during this process because human users often do not know how robots acquire skills: is the robot’s direction of gaze important, or do other factors influence machine learning?

Researchers on this project are investigating how study participants perceive the way a robot works and are developing visualizations that can improve users’ understanding of the robot. In addition, the project examines how different levels of co-construction affect understanding. The first level involves simply observing the learning system. The second level introduces user scaffolding through feedback to the robot, without monitoring. Finally, at the highest level of co-construction, both the user and the system scaffold and monitor each other. The user observes both the system itself and the augmented reality visualizations and provides feedback as scaffolding, while the system adapts the visualizations in response to the user’s feedback.

Research areas: Computer science

Support Staff

Anna-Lena Rinke, Bielefeld University

Arthur Maximilian Noller, Bielefeld University

Mathis Tibbe, Bielefeld University

Publications

Components of an explanation for co-constructive sXAI

A.-L. Vollmer, H.M. Buhl, R. Alami, K. Främling, A. Grimminger, M. Booshehri, A.-C. Ngonga Ngomo, in: K.J. Rohlfing, K. Främling, S. Alpsancar, K. Thommes, B.Y. Lim (Eds.), Social Explainable AI, Springer, n.d.

Practices: How to establish an explaining practice

K.J. Rohlfing, A.-L. Vollmer, A. Grimminger, in: K. Rohlfing, K. Främling, K. Thommes, S. Alpsancar, B.Y. Lim (Eds.), Social Explainable AI, Springer, n.d.

The power of combined modalities in interactive robot learning

H. Beierling, R. Beierling, A.-L. Vollmer, Frontiers in Robotics and AI 12 (2025).

Human-Interactive Robot Learning: Definition, Challenges, and Recommendations

K. Baraka, I. Idrees, T.K. Faulkner, E. Biyik, S. Booth, M. Chetouani, D.H. Grollman, A. Saran, E. Senft, S. Tulli, A.-L. Vollmer, A. Andriella, H. Beierling, T. Horter, J. Kober, I. Sheidlower, M.E. Taylor, S. van Waveren, X. Xiao, Transactions on Human-Robot Interaction (n.d.).

Forms of Understanding for XAI-Explanations

H. Buschmeier, H.M. Buhl, F. Kern, A. Grimminger, H. Beierling, J.B. Fisher, A. Groß, I. Horwath, N. Klowait, S.T. Lazarov, M. Lenke, V. Lohmer, K. Rohlfing, I. Scharlau, A. Singh, L. Terfloth, A.-L. Vollmer, Y. Wang, A. Wilmes, B. Wrede, Cognitive Systems Research 94 (2025).

What you need to know about a learning robot: Identifying the enabling architecture of complex systems

H. Beierling, P. Richter, M. Brandt, L. Terfloth, C. Schulte, H. Wersing, A.-L. Vollmer, Cognitive Systems Research 88 (2024).

Advancing Human-Robot Collaboration: The Impact of Flexible Input Mechanisms

H. Beierling, K. Loos, R. Helmert, A.-L. Vollmer, Proc. Mech. Mapping Hum. Input Robots Robot Learn. Shared Control/Autonomy-Workshop RSS, 2024.

Technical Transparency for Robot Navigation Through AR Visualizations

L. Dyck, H. Beierling, R. Helmert, A.-L. Vollmer, in: Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction, ACM, 2023, pp. 720–724.

Forms of Understanding of XAI-Explanations

H. Buschmeier, H.M. Buhl, F. Kern, A. Grimminger, H. Beierling, J. Fisher, A. Groß, I. Horwath, N. Klowait, S. Lazarov, M. Lenke, V. Lohmer, K. Rohlfing, I. Scharlau, A. Singh, L. Terfloth, A.-L. Vollmer, Y. Wang, A. Wilmes, B. Wrede, ArXiv:2311.08760 (2023).
