The same explanation can be too detailed for one person and not detailed enough for another, depending on who receives it. With their model "SNAPE", the computer scientists of TRR 318 show how machines can handle this problem and adapt their explanation strategies individually and in real time.

"Even if a machine's explanation is correct and complete, this does not mean it will be understood. The success of an explanation also depends on the explainee, the person to whom the explanation is addressed," says Amelie Robrecht, research assistant in the Transregio subproject A01 "Adaptive Explanation". With project leader Professor Dr. Stefan Kopp from the "Cognitive Systems and Social Interaction" research group at Bielefeld University, she has developed a real-time model for AI explanation strategies. The model considers different linguistic levels of an explanation and changes its strategy as the interaction progresses.

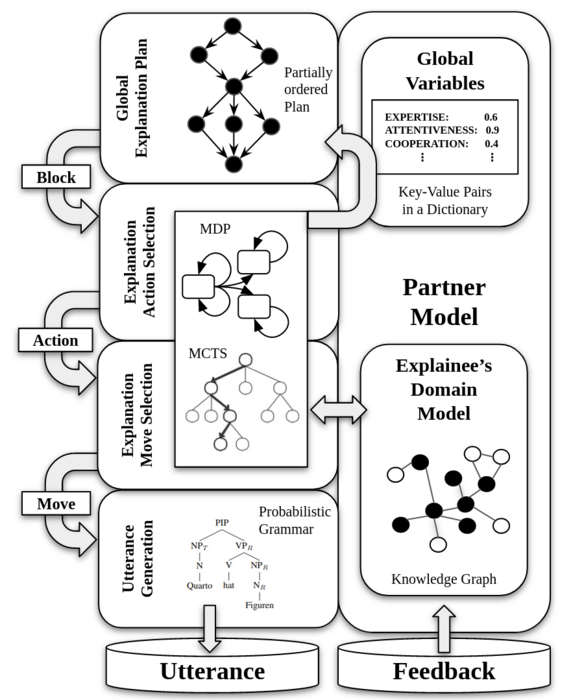

"Our model is important for current research on explainable artificial intelligence: AI systems should be equipped with personalized explanations that show their users how an AI works and why it acts the way it does," says Kopp. "To do this, the machine must be able to decide independently on the content of the explanation and choose an explanation strategy - for example, whether to deepen existing information or introduce new information. The tricky part is that the basis for this decision constantly changes during an explanation."

Dynamic partner model as a basis for personalized explanation

To adapt the explanation to the information needs of the human partner, the machine uses a dynamic partner model, which it builds during the dialogue and revises as needed. Ph.D. student Amelie Robrecht demonstrated this updating process in real time using simulated feedback behavior. She chose the fictional characters Harry, Ron, and Hermione. At the start, the machine was given Hermione's partner model. "Hermione is interested, focused, and an expert at everything. However, the feedback the model received on its explanations did not match Hermione, but rather Harry, who is easily distracted and has little expertise. The more feedback the model received, the more it changed its explanation strategy to match Harry," says Robrecht.
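The updating process described above can be illustrated with a minimal sketch. It is not the SNAPE implementation: it assumes, purely for illustration, that the partner model is a probability distribution over three fixed profiles and that each profile produces the feedback signals "+", "-", and no answer with invented likelihoods. The names `PROFILES`, `update_belief`, and `run_dialogue` are hypothetical.

```python
# Hypothetical likelihoods: how often each profile is assumed to give
# positive feedback ("+"), negative feedback ("-"), or no answer (None).
# These numbers are invented for illustration, not taken from the paper.
PROFILES = {
    "Hermione": {"+": 0.8, "-": 0.1, None: 0.1},
    "Ron": {"+": 0.5, "-": 0.3, None: 0.2},
    "Harry": {"+": 0.2, "-": 0.5, None: 0.3},
}


def update_belief(belief, feedback):
    """Apply one Bayesian update of the belief over profiles."""
    unnormalized = {
        name: belief[name] * PROFILES[name][feedback] for name in belief
    }
    total = sum(unnormalized.values())
    return {name: value / total for name, value in unnormalized.items()}


def run_dialogue(initial_belief, feedback_sequence):
    """Update the belief once per feedback signal and return the result."""
    belief = dict(initial_belief)
    for feedback in feedback_sequence:
        belief = update_belief(belief, feedback)
    return belief


# The machine starts out almost certain that it is talking to Hermione,
# but the simulated feedback looks much more like Harry's.
initial_belief = {"Hermione": 0.9, "Ron": 0.05, "Harry": 0.05}
final_belief = run_dialogue(initial_belief, ["-", None, "-", "-", "+", "-"])
most_likely = max(final_belief, key=final_belief.get)
print(most_likely, final_belief)
```

With this feedback sequence, the belief shifts away from the initial Hermione assumption and toward Harry, which mirrors the behavior Robrecht describes.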

The model developed by the researchers is hierarchical: the highest level determines the next topic, and the lowest level forms the appropriate sentence. Each level interacts with the current partner model to ensure a user-oriented explanation. The team currently uses three response options: "+" (understood), "-" (not understood), and "None" (no answer). If users understand what is being explained, the model receives plus points; if not, minus points. Based on this feedback, the model calculates new probabilities for the best explanation.
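As a rough sketch of how such a two-level loop could look, the following example picks a topic at the top level, picks a strategy at the lower level, and converts feedback points into probabilities. Everything here is an assumption made for illustration: the class `ExplainerSketch`, the point values, the topic names, and the use of a softmax are not taken from the SNAPE paper.

```python
import math

# Assumed point values for the three response options.
FEEDBACK_POINTS = {"+": 1.0, "-": -1.0, None: 0.0}


def softmax(scores):
    """Turn raw scores into probabilities that sum to 1."""
    highest = max(scores.values())
    exps = {key: math.exp(value - highest) for key, value in scores.items()}
    total = sum(exps.values())
    return {key: value / total for key, value in exps.items()}


class ExplainerSketch:
    """Toy two-level explainer; not the authors' implementation."""

    def __init__(self, topics):
        # Running estimate of how well each topic has been understood.
        self.understanding = {topic: 0.0 for topic in topics}
        # Running score for each explanation strategy.
        self.strategy_scores = {"introduce_new": 0.0, "deepen": 0.0}

    def choose_topic(self):
        """Top level: continue with the least understood topic."""
        return min(self.understanding, key=self.understanding.get)

    def choose_strategy(self):
        """Lower level: pick the currently most probable strategy."""
        probabilities = softmax(self.strategy_scores)
        best = max(probabilities, key=probabilities.get)
        return best, probabilities

    def register_feedback(self, topic, strategy, feedback):
        """Add plus or minus points for the topic and strategy used."""
        points = FEEDBACK_POINTS[feedback]
        self.understanding[topic] += points
        self.strategy_scores[strategy] += points


explainer = ExplainerSketch(["rules", "goal"])
topic = explainer.choose_topic()
strategy, _ = explainer.choose_strategy()
explainer.register_feedback(topic, strategy, "-")  # not understood
next_strategy, next_probabilities = explainer.choose_strategy()
print(topic, strategy, "->", next_strategy, next_probabilities)
```

In this toy run, negative feedback on newly introduced information lowers that strategy's probability, so the next move deepens existing information on the same topic instead.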

Research paper for the International Conference on Agents and Artificial Intelligence

After developing the model and testing it with simulated feedback, the computer scientists plan a study with real users. "We want to find out whether users are more satisfied with an automatic explanation if there is a system behind it that adapts during the interaction," says Robrecht.

Robrecht and Kopp have documented their research in the article "SNAPE: A Sequential Non-Stationary Decision Process Model for Adaptive Explanation Generation." The paper was presented at the 15th International Conference on Agents and Artificial Intelligence (ICAART). Researchers, engineers, and practitioners gathered in Lisbon, Portugal, from 22 to 24 February to discuss theories and applications in the field.