Approximately a year ago, sociologist Nils Klowait asked ChatGPT to program a mouse-controlled game where a cat dodged hotdogs falling from the sky. At the time, the game’s purpose was to illustrate how ChatGPT could enable non-programmers to accomplish a broader range of technical tasks, and to discuss the wider sociological implications of such systems. However, Nils Klowait soon noticed that the game could be used for his own research. He and his colleagues have now been able to present their initial findings at the UCLA Co-Operative Action Lab, to be followed by upcoming publications in academic journals.

How did you integrate the simple computer game into your wider research?

NK: I have been playing around with ChatGPT since its very first public version – with new technologies it is never quite clear where they can and should be used. Over time, I began to explore the possibility of using ChatGPT for my research on co-construction. I was interested in the question of how AI systems can be transformed to be more understandable to a diversity of users and be responsive to their requirements. This was the genesis of the “AI explains AI” pilot project: can we use one artificial intelligence to help explain another? I modified the original cat-hotdog game to run autonomously – now there were many cats dodging many hotdogs, with a neural network controlling each cat.

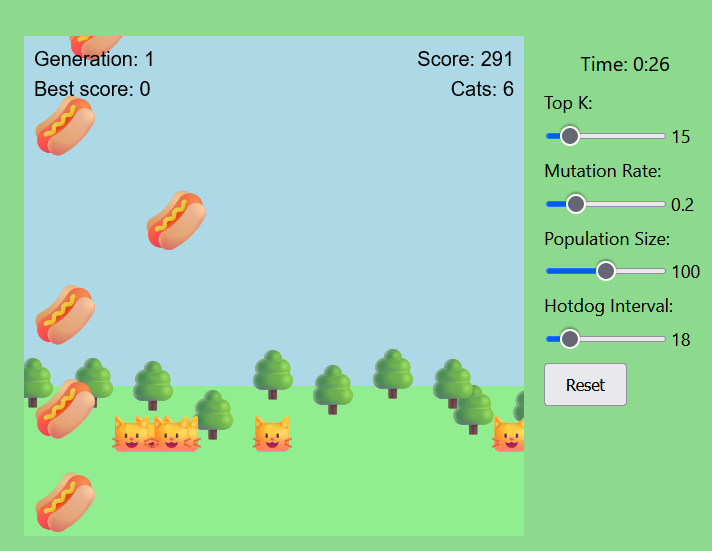

The system was based on reinforcement learning, where each new generation of cats would ‘evolve’ better strategies for avoiding the hotdogs and surviving as long as possible. Users could adjust the evolutionary process using sliders on the right. Certain settings would allow the cats to adapt more quickly, while wrong settings could crash the game entirely. The idea was to create a playful environment that is not immediately understandable to new users: while it may be clear what the “population size” slider does, what about “hotdog interval” and “Top K”? What does the “score” mean? Moreover, some sliders had an immediately visible effect – e.g. lowering the “hotdog interval” slider noticeably increased the number of hotdogs on the screen.
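For readers unfamiliar with this kind of generational selection, the idea can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the game's actual code: the function names, the flat list of weights standing in for each cat's neural network, the Gaussian mutation, and the toy fitness function are all hypothetical. Only “population size” and “Top K” correspond to sliders named in the interview, and the sketch assumes that “Top K” is the number of best-scoring cats kept as parents for the next generation.

```python
import random


def toy_fitness(weights):
    """Hypothetical stand-in for a cat's score: higher when weights are near 0.5."""
    return -sum((w - 0.5) ** 2 for w in weights)


def next_generation(population, fitness, top_k, mutation_scale, rng):
    """Keep the top_k best individuals and refill the population with mutated copies."""
    if top_k < 1:
        raise ValueError("top_k must be at least 1, otherwise there are no parents")
    # Rank individuals from best to worst score.
    ranked = sorted(population, key=fitness, reverse=True)
    # Assumed meaning of "Top K": only the k best survive unchanged.
    parents = ranked[:top_k]
    children = []
    # Refill up to the original population size with noisy copies of the parents.
    while len(parents) + len(children) < len(population):
        parent = rng.choice(parents)
        children.append([w + rng.gauss(0.0, mutation_scale) for w in parent])
    return parents + children


if __name__ == "__main__":
    rng = random.Random(42)
    # Assumed "population size" of 30, each individual having 4 weights.
    population = [[rng.uniform(-1.0, 1.0) for _ in range(4)] for _ in range(30)]
    for generation in range(10):
        population = next_generation(
            population, toy_fitness, top_k=5, mutation_scale=0.1, rng=rng
        )
        best = max(toy_fitness(cat) for cat in population)
        print(f"generation {generation}: best score {best:.4f}")
```

Because the surviving parents are carried over unchanged in this sketch, the best score in the population can never drop from one generation to the next. A smaller `top_k` makes selection stricter, and a larger `mutation_scale` makes the offspring differ more from their parents.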

The aim was to create a “huh?!” moment while also equipping people with enough tools to try and figure out what is going on. The twist was a final addition: ChatGPT itself.

On the far right I added a chat window with GPT-4 – the most powerful conversational AI at the time – which was instructed to help users with any game-related questions they might have. The chatbot – I named it ‘Pythia’ – was supplied with detailed information about the game and had access to its full source code. The game thus simulates a situation that features several focal points of explainability research, allowing us to empirically investigate associated theoretical assumptions regarding explainable AI.

When placed in this confusing situation, what did the participants do to make sense of it?

NK: Would they just ask Pythia for help? Would they play around with the sliders? I had some expectations, but those rarely survive an encounter with reality. Thus, our pilot project was born. With the help of Ilona Horwath, Hendrik Buschmeier, Maria Erofeeva, and Michael Lenke, we set up a 20-minute online experiment where pairs of participants were placed in front of this system and had to accomplish one of four tasks randomly assigned to each pair:

- Interact with the system for 20 minutes.

- Reach a high score within 20 minutes.

- Interact with the system for 20 minutes. Explain evolutionary algorithms based on this example.

- Familiarize yourself with the system within 20 minutes. Explain how it works to Pythia – it will evaluate your understanding.

The setup was meant to vary common contexts of encountering such systems; people were invited in pairs in order to make them talk out loud to each other – we recorded what they said and did, as well as their interactions with the on-screen interface.

Did this work? Did participants actively engage with the explainer AI?

NK: We have a range of surprising initial findings. Generally, there is a huge difference between what the system designer imagined and the actual interaction. For example, some people initially thought that the aim of the game was to hit as many cats with hotdogs as possible. Other participants swore that they could control the cats by pressing keyboard buttons. Others speculated that Pythia was actually on the side of the cats and an adversary to the human participants. This highlights three crucial aspects: exploration, trust, and time.

Can you expand on the aspect of exploration?

NK: Participants do not necessarily adhere to the plans of the system designer: by playing around with and exploring such a system, they may arrive at unexpected conclusions about how it works, and what each part represents. They may think that the sliders do nothing, or that the game is secretly controlled by a human in another room. The same applies to the explainer AI – even though Pythia introduced itself as an explainer AI and consistently stayed on topic in that role, not all participants believed its responses. After all, one might conclude that these AIs are collaborating. In short, our participants tried to develop instruments to figure out what the system does, but did not necessarily use the tools we expected, or used them in unintended ways. They were a little bit like early scientists trying to develop experimental tools and procedures.

I see. And what is the role of trust in these encounters?

NK: Even if the role of the explainer is obvious, and even if it is providing accurate and relevant explanations, the participants may choose not to believe them. Like any tool for accomplishing something, ChatGPT may or may not be seen as reliable, especially given its occasional tendency to confidently make up absolute nonsense. More broadly, this trust may be affected by the longer-term relationships we form with these technologies – for instance, I grew to understand that my Alexa speaker is pretty silly, but I can somewhat rely on it to set a timer and play instrumental jazz. I would never ask it to explain anything to me. Conversely, ChatGPT might be trusted more due to its eloquence and objectively greater capabilities. Fundamentally speaking, there is a recursion problem: using ChatGPT as an explainer for another AI might be a powerful toolset to enable more democratic human-AI encounters, but ChatGPT might need to become more transparent and explainable first. After all, participants need to be able to understand the limitations and capabilities of ChatGPT. In short, in our quest to understand whether an AI can explain another AI, we may have to resolve whether an AI must first explain itself.

Lastly, time seemed to play a substantial part in Pythia’s role in the exploration.

NK: Indeed! At the moment, ChatGPT-like systems are still predominantly chat-like. We need to type up a question and often wait quite a while for a response. I run in-person stakeholder co-construction workshops, where various people have the chance to critically explore artificial intelligence and discover ways to make its development more democratic. When my colleagues and I gave participants the opportunity to play around with the hotdog-cat system, some of the larger groups ended up only rarely talking to Pythia. I think that one of the reasons is that human social interaction is quite quick: we rapidly exchange phrases, there are overlaps, we use gestures and our bodies to communicate, we make quick changes to slider settings.

Looking at a video of any kind of multi-person interface interaction highlights how much stuff is happening at once. Participants would have to deliberately stop this rapid blow-by-blow interaction and carefully type out a question to Pythia, wait for a response, and then possibly ask a follow-up question. That kind of interaction requires focus and may become a bottleneck for exploration. I do not know how this will change over time – there are now voice-enabled versions of ChatGPT. Perhaps this is a matter of adjustment as well. In our data, people sometimes left Pythia behind to just observe what was happening on the screen whilst talking to the other humans. In some cases, people would first ask Pythia a series of questions and then scroll to Pythia’s answers when they became relevant – here, Pythia became almost like a guidebook rather than an equal-footed participant.

I think that future versions of these types of explainers need to take temporality seriously – the current temporal bottleneck of ChatGPT is only problematic if it is not considered during the interaction design; after all, we regularly write messages to each other and are not bothered by the fact that people take their time to respond.

Has interacting within the Co-Operative Action Lab given you new impetus for your research?

NK: My colleagues and I have been attending the Co-Operative Action Lab for years, and its influence on our work cannot be overstated. Each week, we look at different kinds of video-recorded data of human interaction, which are introduced by the researcher who has collected this material. It is an opportunity to receive insights from highly experienced scholars in the field. But more than the scholarly exchange, the key for me is the atmosphere: lab participants seem to be united by a mutual care and a never-ending curiosity for the complexity of the social world, be it an analysis of people hugging, a teacher explaining a physics phenomenon to students, or how a wheelchair-using participant employs eye-tracking to control a computer. Each meeting radiates this kindness and love for the diversity of the social world; it is honestly inspiring. Lab head Professor Marjorie H. Goodwin, of the UCLA Department of Anthropology, plays a key role in supporting this atmosphere and I am endlessly thankful to be part of the lab.