People use speech, facial expressions, and gestures to show whether they have understood an explanation. This multimodal communication should also be used in explanatory dialogues with AI systems. Researchers from TRR 318 presented their approaches and results in this area at ICMI 2024, the 26th International Conference on Multimodal Interaction. Four members of the Transregio traveled to San José, Costa Rica, for the conference, which ran from November 4 to 8.

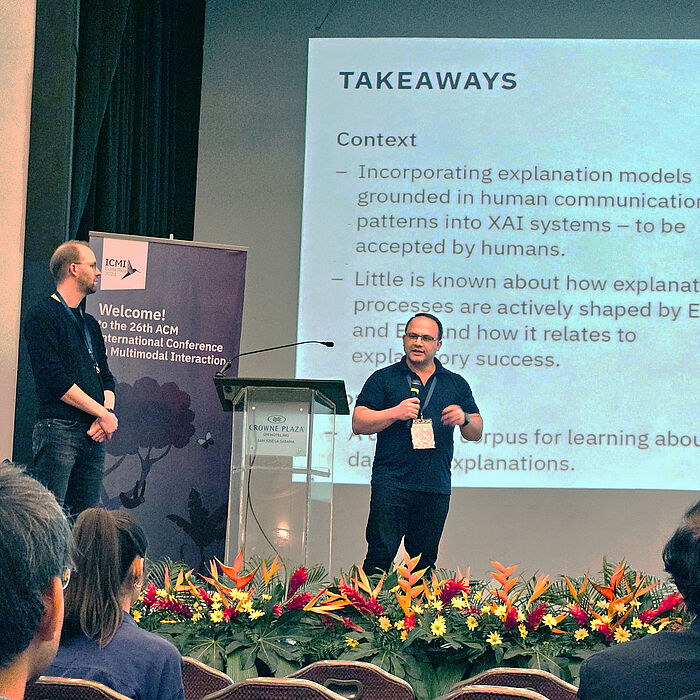

TRR 318 contributed a workshop on the first day of the conference, devoted to a central research topic of the Transregio. Together with Professor Teena Hassan from Bonn-Rhein-Sieg University of Applied Sciences, project leaders Professor Hendrik Buschmeier and Professor Stefan Kopp organized the workshop on the multimodal co-construction of explanations with XAI.

XAI, or Explainable Artificial Intelligence, aims to make AI systems transparent and understandable. "However, current methods are mainly aimed at experts," says Buschmeier. "Meanwhile, multimodal interaction research focuses on enabling meaningful communication between humans and AI systems. The workshop has therefore built a bridge between XAI and multimodal interaction."

The roughly 30 workshop participants examined interactive and social processes in explanation situations, focusing on spoken language, gaze, and gestures. "Our aim was to see XAI explanations as a multimodal, interactive challenge. By networking between researchers in the fields of XAI and multimodal interaction, we are promoting work on understandable XAI systems," says Buschmeier.

At the workshop, Amit Singh, a psycholinguist and doctoral student of TRR 318 speaker Professor Katharina Rohlfing, presented his research on gaze behavior during human-robot explanatory dialogue. "An online method, such as an eye tracker, makes it possible to study the cognitive basis of attention during an explanatory dialogue," says Singh. "Our analysis shows how attention to the partner and the task changes over time during the explanatory dialogue, a central focus of the investigation in project A05."

In addition, researchers from two other Transregio projects presented their research at the conference. Dr. Olcay Türk, a linguist in project A02, "Monitoring the understanding of explanations," and his project colleagues analyzed how well understanding in explanatory situations can be predicted from multimodal cues. The team investigated how a classifier can distinguish understanding from non-understanding. "The results of our study show how relevant multimodal representations are in explanations," says Türk.

Meisam Booshehri from the INF project "Toward a framework for assessing explanation quality" presented a conceptual model of the most important factors contributing to the success of dialogue-based explanations. Together with Professor Hendrik Buschmeier and Professor Philipp Cimiano, he characterized explanatory dialogues in which the explainer and the explainee alternately drive the explanation process forward. The computer scientist annotated several hundred dialogue-based explanations. "We calculated the relationship between the explanation process and the success of an explanation and identified factors that contribute to the success of an explanation," says Booshehri.

The ICMI (ACM International Conference on Multimodal Interaction) is a scientific conference focusing on multimodal interaction and communication. It brings together researchers from various disciplines, such as computer science, cognitive science, and linguistics. It aims to make human interactions with machines more natural and effective.

Workshop Multimodal Co-Construction of Explanations with XAI

Research articles with participation of TRR 318:

- Booshehri, M., Buschmeier, H., & Cimiano, P. (2024). A model of factors contributing to the success of dialogical explanations. Proceedings of the 26th ACM International Conference on Multimodal Interaction, 373-381. https://doi.org/10.1145/3678957.3685744

- Türk, O., Lazarov, S., Wang, Y., Buschmeier, H., Grimminger, A., & Wagner, P. (2024). Predictability of comprehension in explanatory interactions based on multimodal cues. Proceedings of the 26th ACM International Conference on Multimodal Interaction, 449-458. https://doi.org/10.1145/3678957.3685741

- Singh, A., & Rohlfing, K. J. (2024). Coupling task and partner models: Investigating intra-individual variability in gaze during human-robot explanatory dialogue. ICMI Companion '24: Companion Proceedings of the 26th International Conference on Multimodal Interaction, 218-224. https://doi.org/10.1145/3686215.3689202

Review article on the workshop

Buschmeier, H., Kopp, S., & Hassan, T. (2024). Multimodal Co-Construction of Explanations with XAI Workshop. Proceedings of the 26th ACM International Conference on Multimodal Interaction, 698-699. https://doi.org/10.1145/3678957.3689205