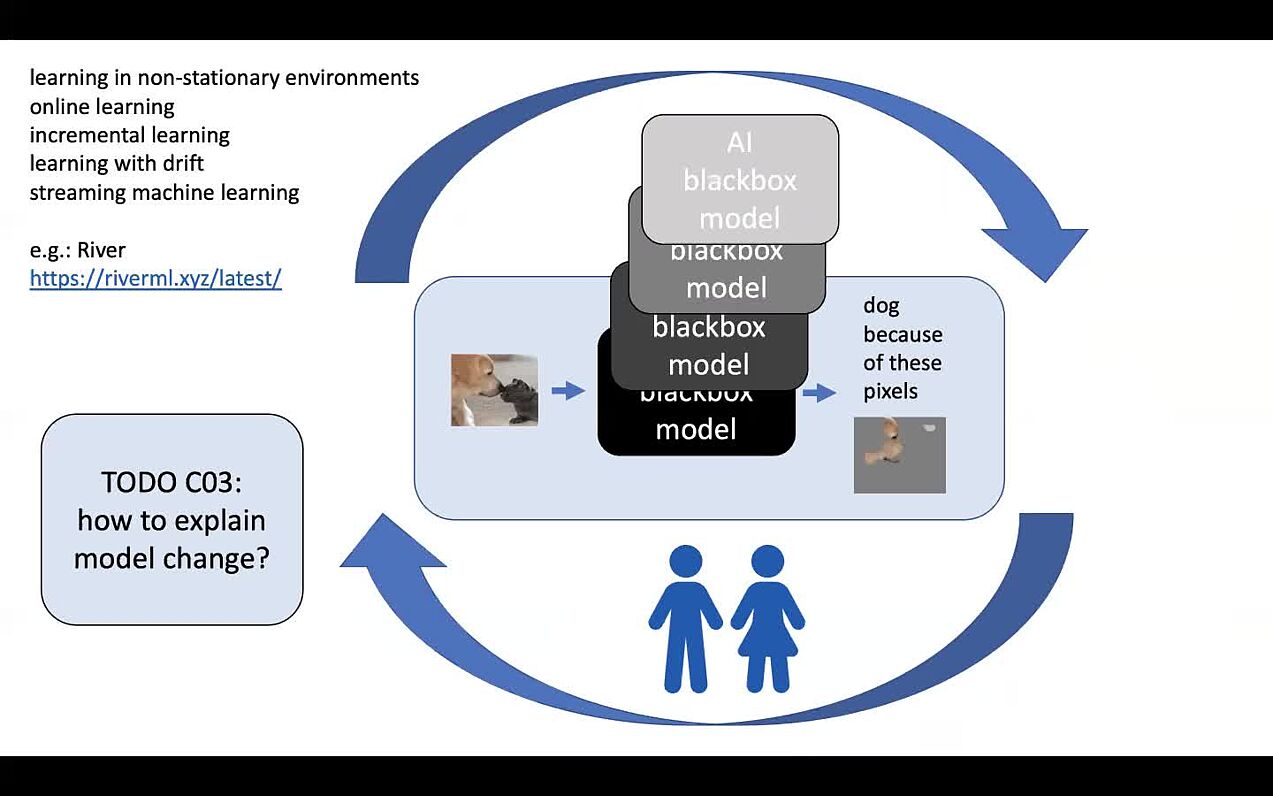

Project C03: Interpretable machine learning: Explaining Change

Today, machine learning is commonly used in dynamic environments such as social networks, logistics, transportation, retail, finance, and healthcare, where new data is generated continuously. To respond to changes in the underlying processes and to ensure that learned models continue to function reliably, these models must be adapted continuously. Such changes, like the models themselves, should be made transparent to users through clear explanations, and these explanations must take application-specific needs into account. The researchers working on Project C03 are investigating, from a theoretical-mathematical perspective, how and why different types of models change. Their goal is to develop algorithms that efficiently and reliably detect changes in models and provide users with intuitive explanations of these changes.

Research areas: Computer science

Publications

Agnostic Explanation of Model Change based on Feature Importance

M. Muschalik, F. Fumagalli, B. Hammer, E. Hüllermeier, KI - Künstliche Intelligenz 36 (2022) 211–224.

“I do not know! but why?” — Local model-agnostic example-based explanations of reject

A. Artelt, R. Visser, B. Hammer, Neurocomputing 558 (2023).

SHAP-IQ: Unified Approximation of any-order Shapley Interactions

F. Fumagalli, M. Muschalik, P. Kolpaczki, E. Hüllermeier, B. Hammer, in: NeurIPS 2023 - Advances in Neural Information Processing Systems, Curran Associates, Inc., 2023, pp. 11515–11551.

Incremental permutation feature importance (iPFI): towards online explanations on data streams

F. Fumagalli, M. Muschalik, E. Hüllermeier, B. Hammer, Machine Learning 112 (2023) 4863–4903.

iSAGE: An Incremental Version of SAGE for Online Explanation on Data Streams

M. Muschalik, F. Fumagalli, B. Hammer, E. Hüllermeier, in: Machine Learning and Knowledge Discovery in Databases: Research Track, Springer Nature Switzerland, Cham, 2023.

On Feature Removal for Explainability in Dynamic Environments

F. Fumagalli, M. Muschalik, E. Hüllermeier, B. Hammer, in: ESANN 2023 Proceedings, i6doc.com publ., 2023.

iPDP: On Partial Dependence Plots in Dynamic Modeling Scenarios

M. Muschalik, F. Fumagalli, R. Jagtani, B. Hammer, E. Hüllermeier, in: Communications in Computer and Information Science, Springer Nature Switzerland, Cham, 2023.

Beyond TreeSHAP: Efficient Computation of Any-Order Shapley Interactions for Tree Ensembles

M. Muschalik, F. Fumagalli, B. Hammer, E. Hüllermeier, Proceedings of the AAAI Conference on Artificial Intelligence 38 (2024) 14388–14396.

SVARM-IQ: Efficient Approximation of Any-order Shapley Interactions through Stratification

P. Kolpaczki, M. Muschalik, F. Fumagalli, B. Hammer, E. Hüllermeier, in: S. Dasgupta, S. Mandt, Y. Li (Eds.), Proceedings of The 27th International Conference on Artificial Intelligence and Statistics, PMLR, 2024, pp. 3520–3528.

KernelSHAP-IQ: Weighted Least Square Optimization for Shapley Interactions

F. Fumagalli, M. Muschalik, P. Kolpaczki, E. Hüllermeier, B. Hammer, in: R. Salakhutdinov, Z. Kolter, K. Heller, A. Weller, N. Oliver, J. Scarlett, F. Berkenkamp (Eds.), Proceedings of the 41st International Conference on Machine Learning, PMLR, 2024, pp. 14308–14342.
