Computational linguists at TRR 318 are studying dialogues representing five different levels of explanation. They will present their findings at the COLING international conference.

“Most research on explainable artificial intelligence operates from the assumption that there is an ideal explanation with which the processes behind AI become intelligible. In everyday life, however, explanations work in an entirely different way: they are co-constructed between the explainer and the explainee. We need greater insight into how people explain things in dialogue with each other. Only then can artificial intelligence learn to interact with humans,” says Professor Dr. Henning Wachsmuth, a computational linguist at Leibniz University Hannover (previously at Paderborn University). Wachsmuth heads the C04 and INF subprojects at TRR 318.

In their first study as part of the INF subproject, Wachsmuth and his colleague Milad Alshomary used computational methods to examine how humans explain things in dialogue and analyzed how AI can mimic this process. “In doing so, we were able to gather empirical data on how experts adjust their explanations to different levels based on their interlocutors,” says Alshomary, a doctoral researcher at TRR 318. “Using a language processing program, we have also developed the first systematic predictions of the explanatory flow in dialogues.”

English explanatory dialogues as the basis for the study

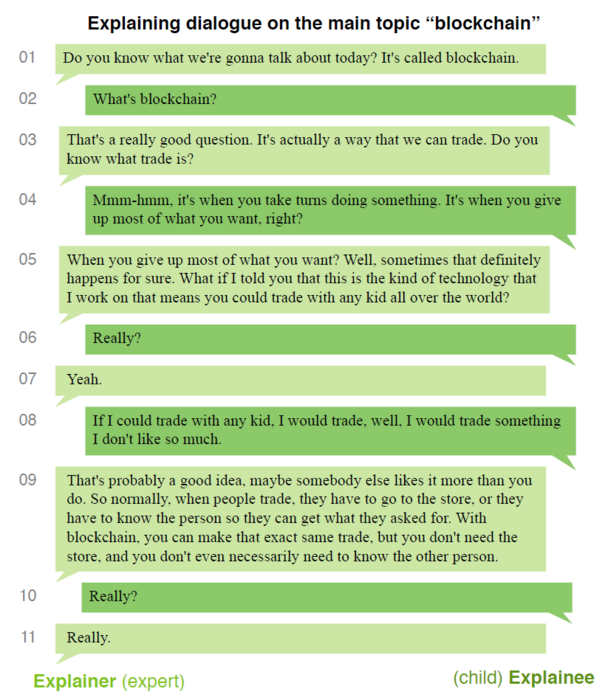

In their study, Wachsmuth and Alshomary analyzed 65 English-language dialogues from the Wired video series “5 Levels,” in which experts explain science-related topics at five different levels of difficulty. An expert first describes, for instance, the concept of a blockchain to a child, then to a teenager, then to an undergraduate and a graduate student, and finally to a colleague. The researchers had independent experts manually and systematically annotate the dialogues, which had already been transcribed. The annotation covered three dimensions: how the topic under discussion relates to the main topic (e.g., subtopic or related topic); the dialogue act (e.g., asking a comprehension question or making an informative statement); and the explanation move (e.g., testing prior knowledge or explaining). For each of these three dimensions, the researchers developed predictive models that they tested experimentally. “Our initial experiments suggest that it is feasible to make predictions based on these annotated dimensions,” explains Wachsmuth. “With our findings, we are laying the foundation for human-centered, explainable AI in which intelligent systems can learn how to respond to people’s reactions and adjust their explanations accordingly.”
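To make the three annotation dimensions more concrete, the following Python sketch shows one way an annotated dialogue could be represented and how the sequence of explanation moves (the "explanatory flow") could be tallied. It is an illustration only, not the researchers' code or data format: the `Turn` class, the `move_transitions` function, the label strings (including "main topic" and "other"), and the toy dialogue are all assumptions invented for this example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Turn:
    """One dialogue turn with the three annotated dimensions (hypothetical schema)."""
    speaker: str           # "explainer" or "explainee"
    text: str
    topic_relation: str    # e.g. "main topic", "subtopic", "related topic"
    dialogue_act: str      # e.g. "check question", "informing statement"
    explanation_move: str  # e.g. "test prior knowledge", "provide explanation"


def move_transitions(turns):
    """Count how often one explanation move directly follows another."""
    moves = [turn.explanation_move for turn in turns]
    return Counter(zip(moves, moves[1:]))


# Invented toy dialogue; it is not taken from the corpus.
dialogue = [
    Turn("explainer", "Do you know what a blockchain is?",
         "main topic", "check question", "test prior knowledge"),
    Turn("explainee", "No, not really.",
         "main topic", "informing statement", "other"),
    Turn("explainer", "It is a shared list of records.",
         "main topic", "informing statement", "provide explanation"),
    Turn("explainer", "Each record points to the one before it.",
         "subtopic", "informing statement", "provide explanation"),
]

transitions = move_transitions(dialogue)
for (previous_move, next_move), count in transitions.items():
    print(f"{previous_move} -> {next_move}: {count}")
```

Transition counts of this kind are only the simplest possible summary of an explanatory flow; the predictive models mentioned in the article are not reproduced here.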

Research paper for upcoming conference

Wachsmuth and Alshomary summarized the data they compiled and their findings in their article “Mama Always Had a Way of Explaining Things So I Could Understand: A Dialogue Corpus for Learning to Construct Explanations.” They will present the paper at the 29th International Conference on Computational Linguistics (COLING), which takes place from October 12 to 17 in Gyeongju, South Korea. COLING is held every two years and is one of the most important international conferences in the field of natural language processing.