Research profile of TRR 318

Algorithm-based approaches, such as machine learning, are becoming increasingly complex. The resulting lack of transparency makes it more difficult for human users to understand and accept the decisions proposed by Artificial Intelligence (AI). In response to these societal challenges, computer scientists have begun to develop self-explanatory algorithms that provide intelligent explanations (so-called “Explainable Artificial Intelligence”, or XAI). XAI programs, however, interact with humans only to a limited extent and do not take into account the given context or the types of information that their addressees need. This runs the risk of generating AI explanations that the human user will not understand.

Members of the Transregional Collaborative Research Centre “Constructing Explainability” (TRR 318) are challenging this one-way view of explanation, conceiving of it instead as a process of co-construction: in this model, the person receiving the explanation takes an active role in the AI explanatory process and co-constructs both the goal and the process of explanation. In a collaborative, interdisciplinary approach, the mechanisms of explainability and explanations are being investigated by 21 project leads assisted by approximately 50 researchers from fields as diverse as computer science, economics, linguistics, media science, philosophy, psychology and sociology.

The findings of the research efforts from TRR 318 will contribute to the development of:

- A multi-disciplinary understanding of the explanatory process linked to the process of understanding and the contextual factors influencing it.

- Computer models and complex Artificial Intelligence systems that can generate situation-specific and efficient explanations for their addressees.

- A theory of explanation as social practice that considers the expectations of the person being communicated with, as well as their role in the interaction.

These foundations for explainable and understandable Artificial Intelligence systems will enable greater active and critical participation in the digital world.

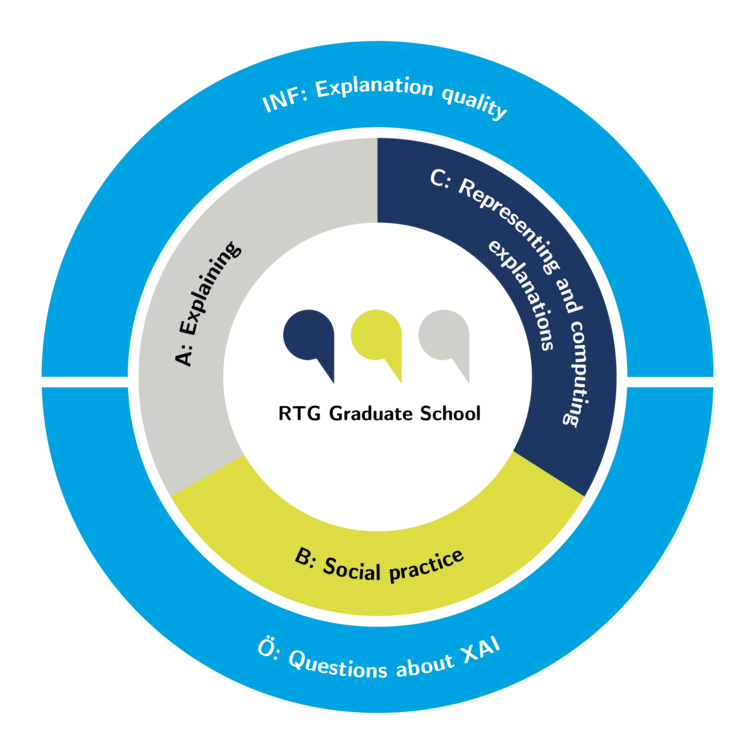

The areas of research included in TRR 318 are divided into three categories: (A) “Explaining”; (B) “Social practice”; and (C) “Representing and computing explanations”. These three areas in turn are subdivided into interdisciplinary subprojects.

Project INF provides an overarching research structure; Project Ö facilitates public relations and outreach; Project Z addresses administrative, organizational, and financial matters; and the RTG Project provides a framework for educating doctoral and post-doctoral researchers.